Elon Musk’s AI Group Has Set Up a 'Gym' to Train Bots

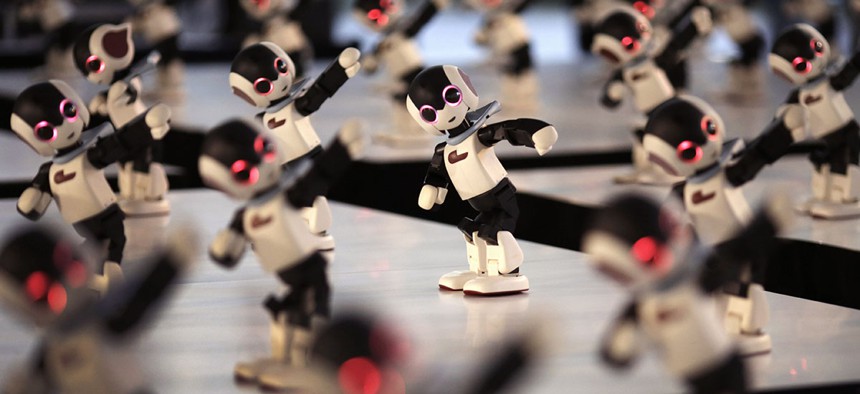

Eugene Hoshiko/AP

OpenAI Gym is meant to be used as a benchmarking tool for artificial intelligence programs.

Earlier this week, OpenAI, the nonprofit research group with billion-dollar backing from Elon Musk and other tech luminaries, launched its first program. It’s called OpenAI Gym, and it’s meant to be used as a benchmarking tool for artificial intelligence programs.

Musk once said he thought truly intelligent artificial agents could be more harmful to the human race than nuclear weapons. When OpenAI launched in December, its stated goal was to “advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.” That sounds a lot like an AI version of Google’s long-held mantra: “Don’t be evil.”

OpenAI Gym is meant to standardize testing for AI systems. The idea is that if everyone uses the same benchmark tests, there should be less room for unintended consequences, like the destruction of the human race, or AI programs achieving the singularity and leaving us to go do their own thing on the Internet.

The group has some of the most respected minds in the field working on its “gym,” including former Google AI researcher Ilya Sutskever, Stripe’s former chief technology officer Greg Brockman, and computer scientists Andrej Karpathy and Wojciech Zaremba, who has worked on Google’s and Facebook’s AI research programs.

The software works as a “gym” because it isn’t just one test. Researchers run their programs through a range of different scenarios to see how they hold up. According to OpenAI’s blog post, programs in its gym can play classic Atari games like Pong and the board game Go (both of which Google’s AI research divisions have been tackling for a few years), control a robot walking in 2-D and 3-D environments, and carry out computations learned from examples rather than from explicitly stated mathematical rules, among other tasks. In theory, researchers can then see how their programs did and publish their results for others to compare.
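For readers curious what running code “through a range of different scenarios” looks like in practice, here is a minimal Python sketch of a benchmark loop. It assumes the agent-environment interface Gym used at launch, where `reset()` returns an observation and `step(action)` returns `(observation, reward, done, info)`. The two environments, the `benchmark` and `run_episode` helpers, and the `always_one` policy are hypothetical toy stand-ins written for illustration, not real Gym code.

```python
import random


# Hypothetical toy environments, loosely modeled on the Gym interface:
# reset() -> observation, step(action) -> (observation, reward, done, info).
class CoinFlipEnv:
    """One-step task: guess a hidden coin flip (0 or 1)."""

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.coin = 0

    def reset(self):
        self.coin = self.rng.randint(0, 1)
        return 0  # the observation reveals nothing about the coin

    def step(self, action):
        reward = 1.0 if action == self.coin else 0.0
        return 0, reward, True, {}  # every episode ends after one guess


class CountdownEnv:
    """Multi-step task: drive a counter from 5 down to 0."""

    def __init__(self, seed=None):
        self.count = 5  # the task is deterministic, so the seed is unused

    def reset(self):
        self.count = 5
        return self.count

    def step(self, action):
        if action == 1:  # action 1 decrements; anything else wastes a turn
            self.count -= 1
        done = self.count == 0
        reward = 1.0 if done else -0.1  # small penalty for each extra step
        return self.count, reward, done, {}


def run_episode(env, policy, max_steps=100):
    """Play one episode and return the total reward collected."""
    obs = env.reset()
    total = 0.0
    for _ in range(max_steps):
        obs, reward, done, _info = env.step(policy(obs))
        total += reward
        if done:
            break
    return total


def benchmark(policy, env_factories, episodes=100, seed=0):
    """Average return of `policy` on every environment in the suite."""
    scores = {}
    for name, make_env in env_factories.items():
        env = make_env(seed)
        returns = [run_episode(env, policy) for _ in range(episodes)]
        scores[name] = sum(returns) / episodes
    return scores


def always_one(obs):
    """A trivial hypothetical policy that ignores the observation."""
    return 1


SUITE = {"coin_flip": CoinFlipEnv, "countdown": CountdownEnv}

if __name__ == "__main__":
    print(benchmark(always_one, SUITE, episodes=1000))
```

Because every environment exposes the same two methods, one harness can score any policy on any task in the suite. That shared interface is what lets results from different researchers be compared side by side.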

Despite decades of talk and research, AI has yet to become general-purpose. In most cases, from IBM’s Deep Blue computer beating Garry Kasparov at chess to those robots that aren’t very good at playing soccer, programs and bots have been built and tuned to be very good at one task. They may be superhuman at what they were programmed for, but if you played Deep Blue at checkers, you’d win. Getting programs, robots, chatbots, or self-driving cars to adapt to new situations is far more difficult.

OpenAI Gym could help researchers build AI software that works across many tasks, and give them a common yardstick for showing that what they’ve built is stable enough for others to reproduce. As Popular Science’s Dave Gershgorn puts it: “In any scientific arena, good research is able to be replicated.”
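In a benchmark setting, replication largely comes down to recording everything that influences a score, including random seeds. The Python sketch here uses a hypothetical four-way guessing task and a random-guessing agent (both invented for illustration, not part of OpenAI Gym) to show that re-running an evaluation with the same recorded seed yields the identical score. The `evaluate` and `replicate` helpers are likewise hypothetical.

```python
import random


def evaluate(seed, episodes=200):
    """Score a hypothetical random-guessing agent on a hypothetical 1-in-4 guessing task."""
    env_rng = random.Random(seed)        # drives the environment's hidden targets
    agent_rng = random.Random(seed + 1)  # drives the agent's guesses
    wins = 0
    for _ in range(episodes):
        target = env_rng.randrange(4)
        guess = agent_rng.randrange(4)
        if guess == target:
            wins += 1
    return wins / episodes


def replicate(seed, runs=3):
    """Re-run the same evaluation; report whether every run matched the first, plus the score."""
    results = [evaluate(seed) for _ in range(runs)]
    return all(r == results[0] for r in results), results[0]


if __name__ == "__main__":
    matched, score = replicate(42)
    print(f"seed=42 score={score} replicated={matched}")
```

Publishing the seed alongside a score is one simple way a result posted to a shared benchmark could be checked by someone else.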

OpenAI’s benchmarking program may not catch on, but it is being built by researchers with strong ties to the AI community who are dedicated to making the technology useful. As Wired’s Cade Metz pointed out in a recent profile of the group, they are concerned “that if people can build AI that can do great things, then they can build AI that can do awful things, too.” The hope is that by keeping everyone’s research out in the open, through tools like this one, there’s less chance of anything nefarious happening.